Bamboo Learnings

Some stories about AI, Nvidia, education, and patience.

Once a Chinese bamboo tree breaks through the ground, it will grow 90 feet tall in just five weeks. But before it does, the bamboo tree has to be watered and fertilized, daily, for five years. During this time the roots are being established, and nothing is visible above ground.

The question is did the Chinese bamboo tree grow 90 feet tall in five weeks or in five years? Says Les Brown, the answer is obvious, it grows 90 feet tall in 5 years. If at any time that person stopped watering and fertilizing that tree, it would die in the ground. Some people do not have the patience to wait for the tree to grow, yet many people do. (source)

Deep Learning was already considered a hot thing back in 2015, when I signed up for a class on it at Stanford; I was surprised when the professor mentioned that these techniques had mostly been developed in the 1980s, but just “didn’t work” back then. Now that there’s finally enough data and compute power to make it all click, the sexy new name – Deep Learning – makes everyone line up to take this class.

As it turns out, Geoff Hinton, recent Nobel Prize winner[1], has been working on neural networks – the lynchpin of deep learning and the current AI boom – since he started his PhD in the 1970s! Yes! Now he is rich and famous: Google bought his startup for $44M, and he has won both the Turing Award and the Nobel Prize.

AI seems like the next big wave in tech, and Hinton is considered the Godfather of this revolution[2]; but for decades – decades! – people told him he was wasting his time. Neural networks were never going to work, they said. Research budgets were trimmed, and the field went through an “AI Winter” that lasted until the early 2000s.

Hinton never lost faith though; he kept improving and refining, until the stars finally aligned. In 2012, AlexNet, named after one of Hinton’s students, did remarkably well in identifying objects in images. Far ahead of any other technique. That sparked a renaissance in deep learning, and pushed its adoption across different industries and applications.

I can’t imagine how I would manage to devote my brain power to something for thirty years, without seeing results. But it was certainly worth it in Hinton’s case. He was right about neural networks. Big time. It just required some patience for this bamboo tree to break through the ground.

My son refused to write when he was in kindergarten. He would just sit in the corner whenever they did any writing activities. Nor was he willing to try writing at home. My wife and I didn’t put pressure on him, just said things like “we believe in you” or “you’ll do it when you’re ready”. We weren’t worried. But, like, also, we kind of were. Like, how long was he going to carry this mental block?

Until one day my son came home from school saying, “Daddy, I want to practice writing with you.”

I don’t even remember why. Maybe something he saw at school, or in a book. Whatever. One of those random things that shape your child’s life more than most of your well-thought-out plans.

Back at home, my son tried writing his name. I was preparing to take a picture, but changed my mind upon seeing the result. There were lines all over the page, hardly recognizable. But he kept trying and trying, until he was finally able to do a decent job with his name. Then he moved on to writing my name. Then, the names of his friends. Eventually he wrote a whole letter for mommy. It looked pretty good! She couldn’t believe it when she came home that evening.

My son went from not writing at all that morning, to writing a letter in the evening. There was no “linear progression”. For a long time, it was simply zero measurable progress, then, suddenly, a rapid surge upwards.

Similar to the bamboo-type progress that Geoff Hinton had experienced with neural networks.

People feel more comfortable pretending that things move forward at a constant and steady pace.

Except, mostly, that’s not how things work.

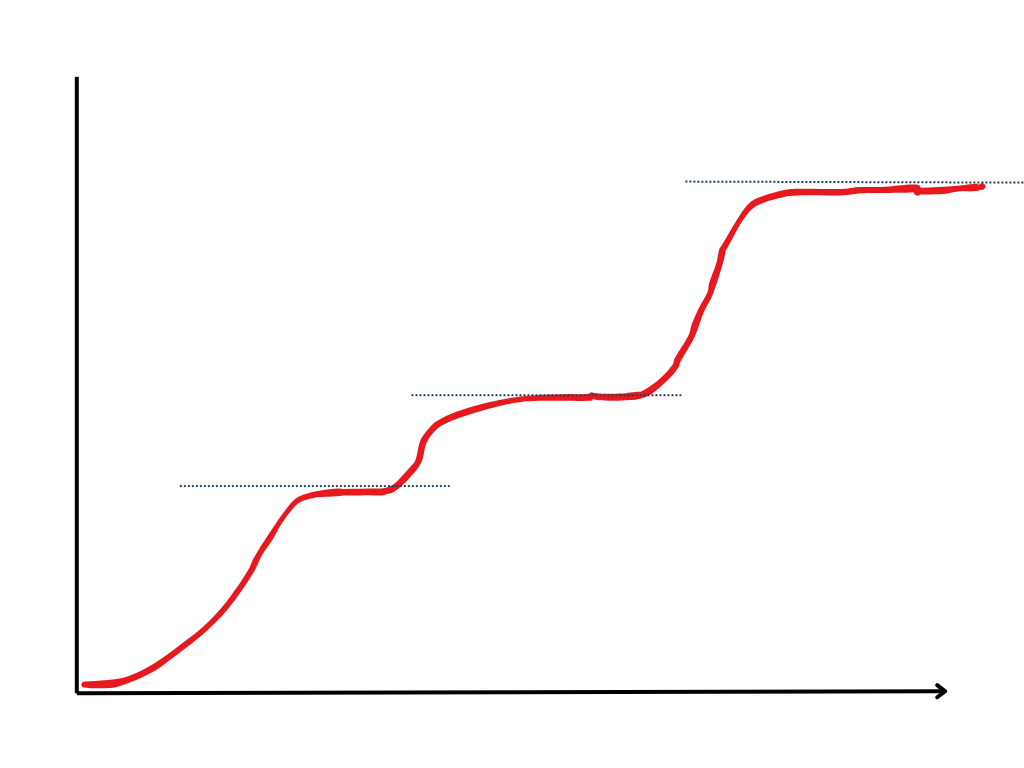

Progress looks more like a series of S-curves than a straight line. Things are stuck for a while. Then there is a mini-breakthrough, and everything blows up (but in a good way). Then progress suddenly stops as it hits a new ceiling. Until the next S-curve.

We never know how long we are going to be stuck rubbing against an asymptote, waiting for the next breakthrough to happen; nor do we know how far ahead we are going to climb when it finally does happen, before we hit the next ceiling.

Nvidia’s stock bottomed in October of 2022, down more than 60% from the previous year’s peak. The crypto crash, along with the general post-pandemic slowdown, drove down demand for the company’s GPUs, just as it was ramping up production. Nvidia had to take a write-down of its inventory, resulting in terrible financial statements. The stock responded accordingly.

A month later, ChatGPT came out, and took the world by storm.

Generative AI is the real deal. The entire tech industry is reorienting itself around it. And what do they all need? That’s right, Graphics Processing Units, the ones made by Nvidia.

Suddenly Nvidia can’t produce enough of its GPUs to satisfy demand.

Speaking of which, these GPUs were an essential part of what enabled the breakthrough in Geoff Hinton’s research. And ChatGPT was built by some of Hinton’s former students. Funny how these things work, huh?

Anyways, guess what happened to Nvidia’s stock? Since the October 2022 bottom, it has gone up – not 2x, not 4x, but 12x! Revenues have quadrupled(!) within two years. Nvidia is now worth over three trillion dollars, at the top of the market, alongside Apple and Microsoft.

No financial analyst, though, would have ever modeled this phenomenal spike. No one could have predicted ChatGPT and its impact. Just before it launched, Nvidia itself had written off its GPU inventory!

Now that AI is surging, could anyone model how long this wave will last? Doomers are comparing it to the 1990s internet bubble, warning that expectations are too high and that Nvidia’s stock might collapse. And they may very well turn out to be correct. Or it may double again from here.

That’s the thing with this S-curve style of progress. For a while, nothing happens. Then, a breakthrough, and everything is happening all at once. You don’t know how far you’re going to get; had I extrapolated based on my son’s progress in that magical afternoon, I might have concluded he would win the Nobel Prize by the time he is in fourth grade.

Rory Sutherland, an advertising expert, has this great saying: success in business is highly messy, non-linear and non-directional; yet most of the effort is devoted to pretending that’s not true.

Well, what if we stopped pretending, and just embraced the messy nature of progress?

Instead of forcing our kids’ education, our careers, or our business to fit the pace of a conveyor belt – and experience a great deal of frustration along the way – we should take the approach of the bamboo farmer.

Plant the seeds, keep watering and fertilizing, be patient, and one day – they just grow ninety feet tall. Out of nowhere. You just have to be patient, as you wait for the next breakthrough. Or the next Bamboo Leap, if you will.

[1] It’s worth noting there is a controversy around Hinton’s winning the Nobel Prize in Physics: https://fortune.com/2024/10/10/controversy-ai-pioneer-geoffrey-hinton-nobel-prize-tech/

[2] It should be noted that Hinton left Google in 2023, and has expressed concerns about the potential implications of AI.